I don’t get to put my scripting chops to work as often as I’d like these days but occasionally there are problems that are best solved by spending some time to automate processes. There is a specific type of report I have to regularly convert from one format to another, and though the process was fairly straightforward with a small team, as we have grown the time this task took grew to be too much. Luckily the report could be exported in multiple formats, including XML and CSV. For this project I opted for the latter.

A CSV (comma-separated values) file is a straight-forward way to organize data, and most modern spreadsheet applications can export to that filetype. Unfortunately these days spreadsheets can contain all sorts of data, including multi-line cells and data that includes the use of commas (which, in a format defined by commas delineating content, can be an issue). Perl excels at reading and modifying text, and luckily with a little elbow-grease I could make it work for my needs.

While there were many tools available to help me in this task, I finally settled on the Text::CSV_XS perl module, which provides a significant speed increase over older modules. This post isn’t strictly a how-to for computer scripting, but rather a collection of things to watch out for that I encountered working on this project.

I started by defining the binary module constant – this told CSV_XS to handle new-line characters as part of an existing field rather than new content, when properly quoted. Using this flag is essential if your data contains any extended character like newlines or UTF symbols.

my $csv = Text::CSV_XS->new ({ binary => 1 });

In addition to the above, there’s another necessary step when calling these functions if your content contains newlines; instead of using a standard parse call, we use the new command getline, which handles line breaks smoothly.

my $line = $csv->getline ($rawdata));

(Obviously the above would be put into a loop if you’re reading more than one line from a file)

Another issue I ran into, stemming from having both the Text::CSV and Text::CSV_XS documentation pages open, was retrieving the data; CSV_XS puts the return of getline in a different format than CSV does, and that’s addressed by mapping the output to a new array for ease of reference:

my @columns = @$line;

Now all of the separate columns can be read or modified as any standard perl array. With all of the above figured out, I was able to run through some tests and automate the report conversion process, reducing a multi-hour task (with our increased team size) down to just a few minutes. The work will continue to be scalable, meaning it will continue to be a time-saver, no matter how large our team gets.

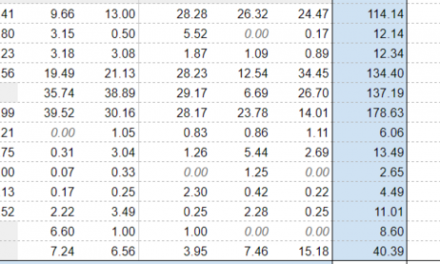

Through this process I also investigated the Math::Round package, which has an impressive amount of number-rounding options.