I recently wrote a relatively non-technical review of some of the woes I’ve faced with my in-home server. Unfortunately, after another week of dead ends and false starts, I’m no closer to figuring out what could be causing my server’s problem. This post will be more of a deep dive into everything I’ve tried and the logic behind those steps, hopefully without much frustration seeping through the keyboard.

Server History

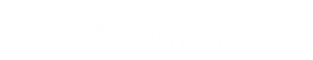

Originally used for network management (DHCP/DNS), website hosting (Apache), several niche business applications (NextCloud), and local shared file storage (accessed through NFS/Samba), the home-built server runs on an ASUS motherboard (model number TBD) with a quad-core Intel i7 and 16 GB of RAM. A PERC 6/i RAID card managed four 2TB WD RED drives in RAID 5 (with one as a hot spare), the OS was Slackware 14.2 (with some packages upgraded to -current), and all was right with the world.

Just under a year ago I decided that I wanted to upgrade the hard drives and also expand my ability to run virtual machines, by installing VMware 6.7 as the base OS. Doing some digging, it looked like my little 6/i card wouldn’t handle disks bigger than 2TB, so I started doing my homework.

The nearest card to the 6/i that could support RAID 10 and 6TB HDDs was the PERC H700, which I happily picked up off of eBay, and set about installing my new system, after making a block-level backup of the old server’s contents.

Unfortunately, several days after installation the system hung, and it would continue to lock up without apparent cause or schedule, somewhere between every few hours and every few days. Not being particularly pleased with a server that wouldn’t stay on, I examined the symptoms.

Lock-Ups

When the server became unresponsive, it appeared to be a full-computer crash that did not result in a poweroff or reboot: both the VMs and host OS would fall off the network (no response via ping), a keyboard plugged in would not display a NumLock light (and was otherwise unresponsive), and no keys would enter the VMware configuration menu visible (but frozen) on the monitor. The hard drives seemed to be under slight load, but with no way to interact with the system, I could only hard-reset it.
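Since the first visible symptom is the host falling off the network, a second machine can at least record when each lock-up happens, which may help show whether the freezes really follow no schedule. The following is a minimal sketch, not something I have run against this server: the address `192.0.2.10` is a placeholder, the helper names are made up for illustration, and the `ping` flags assume the Linux iputils version.

```python
import subprocess
import time
from datetime import datetime

HOST = "192.0.2.10"  # hypothetical address of the VMware host (placeholder)


def ping_once(host, timeout_s=2):
    """Return True if a single ICMP echo is answered.

    Assumes Linux iputils ping: -c is the packet count, -W the timeout in seconds.
    """
    result = subprocess.run(
        ["ping", "-c", "1", "-W", str(timeout_s), host],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def first_outage(samples, threshold=3):
    """Return the timestamp that starts the first run of `threshold`
    consecutive failed probes in (timestamp, ok) pairs, or None."""
    run_start = None
    run_len = 0
    for ts, ok in samples:
        if ok:
            run_start, run_len = None, 0
            continue
        if run_len == 0:
            run_start = ts
        run_len += 1
        if run_len >= threshold:
            return run_start
    return None


def watch(host, interval_s=30, probe=ping_once, threshold=3):
    """Probe the host until it misses `threshold` probes in a row,
    then report and return the time of the first missed probe."""
    samples = []
    while True:
        samples.append((datetime.now(), probe(host)))
        samples = samples[-threshold:]  # only the most recent probes matter
        down_since = first_outage(samples, threshold)
        if down_since is not None:
            print(f"{host} stopped answering at about {down_since:%Y-%m-%d %H:%M:%S}")
            return down_since
        time.sleep(interval_s)
```

Calling `watch(HOST)` from another computer on the same network would block until the host goes quiet. Requiring several missed probes in a row is a deliberate choice so that a single dropped packet is not mistaken for a lock-up.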

These same symptoms present themselves to this day, even after each troubleshooting step I’ll outline below. To say that it’s frustrating is an incredible understatement. Virtual appliances aren’t quite my bailiwick, but having been a server engineer for nearly 20 years, coming across a personal issue that I can’t suss out even after repeated attempts at troubleshooting makes my stress levels rise dramatically.

Troubleshooting

First believing the issue to lie within the RAID card, I ordered a replacement H700. These aren’t the newest cards around, and I had heard stories of them failing in the past. Since VMware was hosted on the same datastore managed by the card, it made sense to me that if the card failed the entire server would crash as well. With the new RAID card installed, the problem continued.

Next I thought to isolate the VMware installation from the RAID card itself, to try to get logging data after a crash, and hopefully to retain some measure of interactivity even if the virtual machines went down. I did this by adding a small SSD to the server, connected directly through the onboard SATA ports, and reinstalling VMware 6.7 there. Sadly, lockups would still take out the entire system. This led me to believe it was a problem with VMware itself, or some misconfiguration therein.
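One way to keep log data that survives a full freeze is to send it off the box as it is written. ESXi can forward its syslog to a remote host (the `Syslog.global.logHost` setting), so a second machine could run something like the receiver below. This is only a rough sketch under assumptions: the function names and the log file path are invented for illustration, and it has not been tried against this server.

```python
import socketserver
from datetime import datetime


def format_record(data, sender, now):
    """Turn one raw syslog datagram into a single timestamped log line."""
    text = data.decode("utf-8", errors="replace").rstrip()
    return f"{now.isoformat(timespec='seconds')} {sender} {text}"


def make_handler(log_path):
    """Build a handler class that appends every datagram to log_path."""

    class SyslogHandler(socketserver.BaseRequestHandler):
        def handle(self):
            # For UDP servers, self.request is a (data, socket) pair.
            data, _sock = self.request
            line = format_record(data, self.client_address[0], datetime.now())
            with open(log_path, "a", encoding="utf-8") as fh:
                fh.write(line + "\n")

    return SyslogHandler


def make_server(log_path, host="0.0.0.0", port=514):
    """Create (but do not start) a UDP syslog receiver.

    Port 514 is the conventional syslog port; binding to it usually
    requires elevated privileges.
    """
    return socketserver.UDPServer((host, port), make_handler(log_path))
```

Running `make_server("esxi-freeze.log").serve_forever()` on another machine would then collect whatever the host manages to send before it locks up. The last few lines before the gap are the interesting ones, though a hard hang may well leave nothing useful behind.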

A useful and powerful suite of utilities made for virtual guests is collectively called VMware Tools. There aren’t packages available specifically for Slackware Linux so I previously made some small workarounds with a Debian install file. Believing that perhaps some connection between the guest OS and VM host was to blame, I then set about uninstalling VMware Tools from my server VM. For good measure I left the server guest powered off, but eventually VMware locked up again. This told me it had little or nothing to do with the guest.

After doing some digging on the VMware Hardware Compatibility List, I learned that the latest version of VMware that supported my H700 card was 6.0, some years older than the shiny new 6.7 I was using. Suspecting a RAID controller/VMware mismatch, I ordered a new card, this time an LSI 9260-8I SGL, which was on the VMware HCL. Thinking I finally had the issue licked, I installed the card and set about re-copying my server back to the (newly-initialized) RAID array. The server froze again, two days into the three-day transfer.

Starting to run out of ideas, and hair to pull out, I ran a comprehensive MemTest86 test suite against my RAM, which passed with flying colors in 12 of 12 tests. MemTest does not mess around, and the tests were both rigorous and thorough—my RAM was (is) good.

After more internet searches and reading of various message boards, I decided to start with the basics and look at motherboard BIOS updates. Sure enough, there was an update newer than the one I had installed, even though it was marked as a 2014 release. After installing the new BIOS and making sure everything went through smoothly, I sought to upgrade the firmware and BIOS on my new LSI card, just for good measure. For some reason, however, I was unable to boot into the LSI controller configuration page, no matter how many times I pressed ^H at system boot. Shrugging, I attempted to re-copy my server back over yet again. A day and a half in, it froze anew.

Looking Ahead

I readily admit I’m at my wit’s end. After purchasing multiple RAID cards and trying different configurations of software and hardware, I am still unable to keep my server running smoothly. With nearly 8TB of data to transfer back every time I recreate the RAID array, there’s about a three-day lag between me trying something and finding out whether or not it worked.
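To put that lag in perspective, the two rough figures above imply an average transfer rate. The quick calculation below is back-of-the-envelope only. It assumes decimal terabytes and treats "nearly 8TB" and "about three days" as exact, which they are not.

```python
def average_rate_mb_s(terabytes, days):
    """Average throughput in MB/s for a transfer of `terabytes` over `days`.

    Assumes decimal units: 1 TB = 1e12 bytes, 1 MB = 1e6 bytes.
    """
    total_bytes = terabytes * 1e12
    seconds = days * 24 * 60 * 60
    return total_bytes / seconds / 1e6


rate = average_rate_mb_s(8, 3)
print(f"{rate:.1f} MB/s")  # prints "30.9 MB/s"
```

Whether roughly 31 MB/s is slow depends on how the copy is done (network, external disk, many small files), so the number is a reference point for comparing attempts rather than a diagnosis.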

Looking at the money I’ve spent upgrading and troubleshooting this box, and what it would cost to create a new server out of whole cloth, I’m quickly approaching the point where the latter makes more sense than continuing the former. What really gnaws at me, though, is my to-date inability to pinpoint exactly what is failing and why. My worry is that if I can’t pin it down, new server components will just exhibit the same issue, because I won’t have addressed whatever the underlying cause really is.

If you have experience with VMware, particularly with troubleshooting freezing servers, I ask that you please send me an email and let me know your thoughts; this has been a truly aggravating experience and one I would like to see behind me. Thank you for your time and attention, and hopefully your expertise as well.